Install the Python environment

Use a virtual environment and install the dependencies:

apt install python3-pip

apt install python3-venv

python3 -m venv venv

source venv/bin/activate
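Optionally, before installing anything, you can confirm that the venv is really active. Inside a virtual environment `sys.prefix` points at the venv directory, while `sys.base_prefix` still points at the system Python. This is a small sketch, not one of the original steps:

```python
import sys


def in_virtualenv():
    """True when running inside a venv created by `python3 -m venv`.

    A venv sets sys.prefix to the venv directory, while sys.base_prefix
    keeps pointing at the base (system) Python installation.
    """
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("inside venv:", in_virtualenv(), "| prefix:", sys.prefix)
```

Run it with `python3` from the shell where `source venv/bin/activate` was executed. It should report `inside venv: True`, and the prefix should be the `venv` directory.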

python3 -m pip install modelscope -i https://pypi.tuna.tsinghua.edu.cn/simple

Download the model

You can search for models on the ModelScope community site:

https://modelscope.cn/

For example, this Qwen3 vision-language model can understand images:

https://modelscope.cn/models/Qwen/Qwen3-VL-2B-Instruct

Download it with the following command:

modelscope download --model Qwen/Qwen3-VL-2B-Instruct

When the download finishes, the files are in the local cache:

ls ~/.cache/modelscope/hub/models/Qwen/Qwen3-VL-2B-Instruct/
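If you want to check the download from a script, the helper below builds the same cache path. It assumes the layout shown by the `ls` command: `~/.cache/modelscope/hub/models/<org>/<name>`. It also assumes that dots in the model name are stored as `___`, which is how `Qwen3-Embedding-0___6B` appears in the embedding and reranker commands later in these notes. Both helper names are hypothetical.

```python
from pathlib import Path

# Assumed ModelScope cache root, matching the `ls` command above.
DEFAULT_CACHE = Path.home() / ".cache" / "modelscope" / "hub" / "models"


def local_model_dir(model_id, cache_root=DEFAULT_CACHE):
    """Expected local directory for a ModelScope model id such as "Qwen/Qwen3-VL-2B-Instruct".

    Assumption: dots in the model name become "___" on disk
    (Qwen3-Embedding-0.6B -> Qwen3-Embedding-0___6B).
    """
    org, name = model_id.split("/", 1)
    return Path(cache_root) / org / name.replace(".", "___")


def is_downloaded(model_id, cache_root=DEFAULT_CACHE):
    """Rough check: the model directory exists and contains a config.json."""
    return (local_model_dir(model_id, cache_root) / "config.json").is_file()
```

For example, `is_downloaded("Qwen/Qwen3-VL-2B-Instruct")` should become `True` once the `modelscope download` command above has finished.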

Install the NVIDIA container runtime

curl -fsSL https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2404/x86_64/3bf863cc.pub | sudo gpg --dearmor -o /etc/apt/trusted.gpg.d/nvidia-cuda.gpg

echo "deb [signed-by=/etc/apt/trusted.gpg.d/nvidia-cuda.gpg] https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2404/x86_64/ /" | sudo tee /etc/apt/sources.list.d/nvidia-cuda.list

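Install the toolkit (it provides nvidia-ctk), register the nvidia runtime with Docker, and restart Docker so the change takes effect:

sudo apt update

sudo apt install -y nvidia-container-toolkit

sudo nvidia-ctk runtime configure --runtime=docker

sudo systemctl restart docker

Start the model service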

docker run -d --runtime nvidia --gpus all --name Qwen3-VL-2B-Instruct -v /root/.cache/modelscope/hub/models/:/vllm-workspace -p 8000:8000 --ipc=host --entrypoint vllm vllm/vllm-openai:latest serve Qwen/Qwen3-VL-2B-Instruct --gpu-memory-utilization 0.8 --quantization fp8 --max-model-len 4096 --enable-auto-tool-choice --tool-call-parser hermes

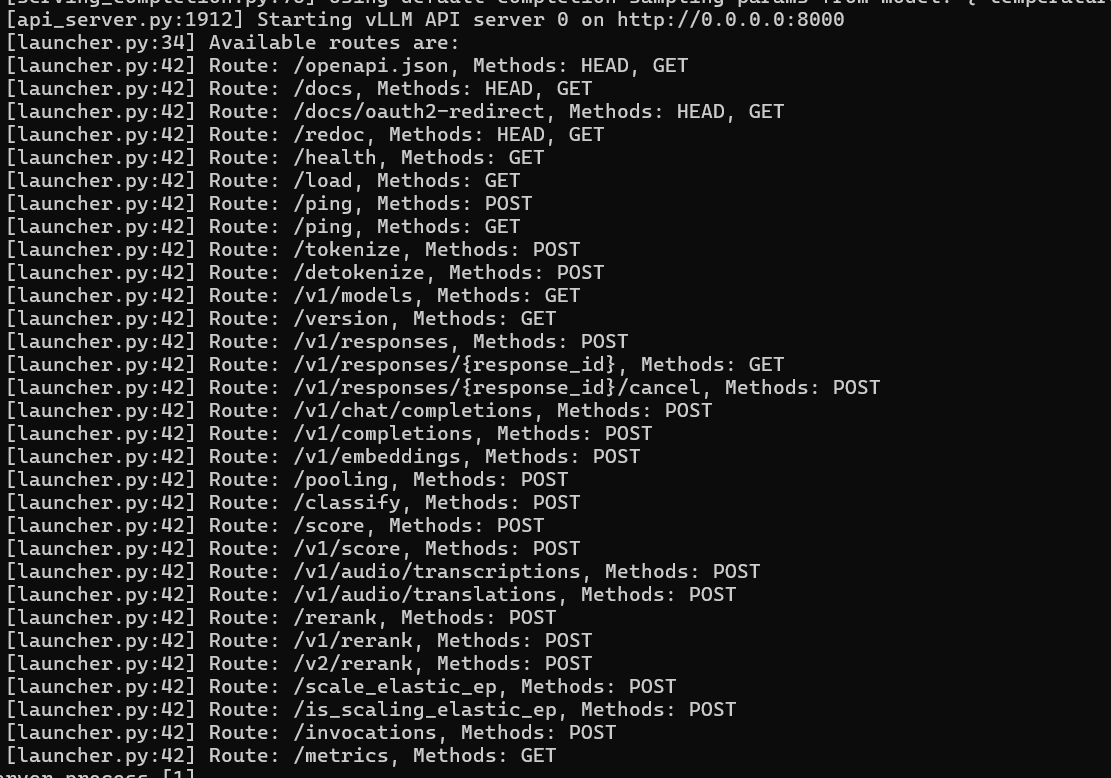
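The `docker run` commands in these notes differ only in container name, host port, model path, memory fraction, context length and a few extra flags. The hypothetical helper below builds the same argument list, so each variant is written once. It relies on the host models directory being mounted at `/vllm-workspace`, the image's working directory, so the model argument is a path relative to that directory. The original command appears to make the same assumption.

```python
import shlex


def vllm_docker_cmd(name, model, host_port, *, gpu_util=0.8, max_len=4096,
                    extra_args=(), image="vllm/vllm-openai:latest",
                    models_dir="/root/.cache/modelscope/hub/models/"):
    """Build a `docker run` argv that mirrors the command above (hypothetical helper)."""
    return [
        "docker", "run", "-d", "--runtime", "nvidia", "--gpus", "all",
        "--name", name,
        "-v", f"{models_dir}:/vllm-workspace",   # host model cache -> image workdir
        "-p", f"{host_port}:8000",               # vLLM listens on 8000 inside the container
        "--ipc=host",
        "--entrypoint", "vllm", image,
        "serve", model,                          # path relative to /vllm-workspace
        "--gpu-memory-utilization", str(gpu_util),
        "--quantization", "fp8",
        "--max-model-len", str(max_len),
        *extra_args,
    ]


if __name__ == "__main__":
    cmd = vllm_docker_cmd(
        "Qwen3-VL-2B-Instruct", "Qwen/Qwen3-VL-2B-Instruct", 8000,
        extra_args=("--enable-auto-tool-choice", "--tool-call-parser", "hermes"),
    )
    print(shlex.join(cmd))
```

You can pass the list directly to `subprocess.run(cmd, check=True)`. This avoids shell quoting problems with arguments that contain JSON, such as the reranker's `--hf_overrides` value.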

Check the logs:

docker logs -f Qwen3-VL-2B-Instruct

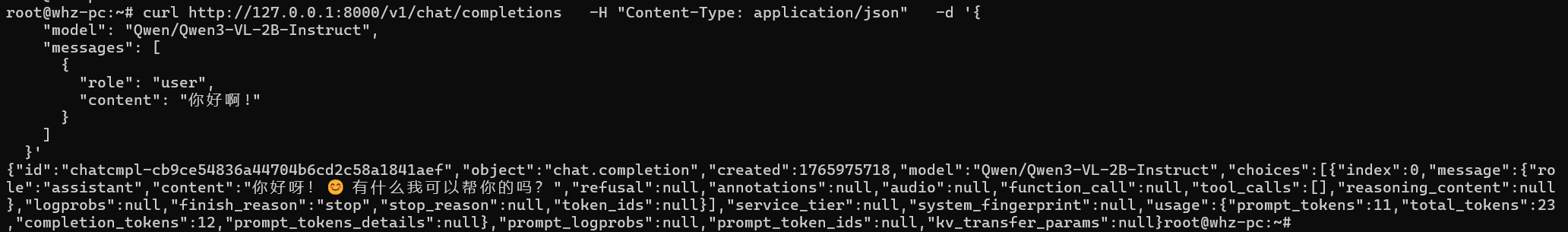
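Instead of watching the logs until the model has loaded, you can poll the server. vLLM's OpenAI-compatible server answers `GET /health` with HTTP 200 once it is up. The sketch below assumes the port mapping from the command above (8000). The HTTP call is injectable (`fetch`, a hypothetical parameter) so the polling logic can be tested without a running server.

```python
import time
import urllib.request


def wait_until_ready(base_url="http://127.0.0.1:8000", timeout=600.0,
                     interval=5.0, fetch=None):
    """Poll GET {base_url}/health until it returns 200; False if `timeout` seconds pass."""
    def default_fetch(url):
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status

    fetch = fetch or default_fetch
    deadline = time.monotonic() + timeout
    while True:
        try:
            if fetch(f"{base_url}/health") == 200:
                return True
        except OSError:
            pass  # connection refused / reset: the container is still loading weights
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Loading weights, and compiling when FP8 quantization is used, can take a while on first start, which is why the default timeout is generous.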

Test

curl http://127.0.0.1:8000/v1/chat/completions -H "Content-Type: application/json" -d '{
  "model": "Qwen/Qwen3-VL-2B-Instruct",
  "messages": [
    {
      "role": "user",
      "content": "你好啊!"
    }
  ]
}'
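The same request from Python, using only the standard library. Qwen3-VL also understands images, so the sketch can attach one using the OpenAI-style list of content parts (an `image_url` part plus a `text` part), which vLLM accepts for multimodal models. A local image can be sent as a base64 `data:` URL. The function names and the example image URL are illustrative assumptions.

```python
import base64
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000/v1"   # port from the docker run command
MODEL = "Qwen/Qwen3-VL-2B-Instruct"     # name vLLM serves (the path given to `serve`)


def bytes_to_data_url(data, mime="image/png"):
    """Encode raw image bytes as a base64 data: URL."""
    return f"data:{mime};base64,{base64.b64encode(data).decode('ascii')}"


def build_chat_payload(text, image_url=None, model=MODEL, max_tokens=256):
    """OpenAI-style chat completion body; adds an image part when image_url is given."""
    if image_url is None:
        content = text
    else:
        content = [
            {"type": "image_url", "image_url": {"url": image_url}},
            {"type": "text", "text": text},
        ]
    return {
        "model": model,
        "messages": [{"role": "user", "content": content}],
        "max_tokens": max_tokens,
    }


def chat(payload, base_url=BASE_URL):
    """POST the payload to /chat/completions and return the assistant's reply text."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

For example, `print(chat(build_chat_payload("你好啊!")))` sends the same message as the curl command. For a local file, pass `image_url=bytes_to_data_url(open("photo.png", "rb").read())`, where `photo.png` is a hypothetical file. A remote image URL must be reachable from inside the container.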

Reference

apt install python3-pip

apt install python3-venv

python3 -m venv venv

source venv/bin/activate

python3 -m pip install modelscope -i https://pypi.tuna.tsinghua.edu.cn/simple

modelscope download --model deepseek-ai/DeepSeek-R1-0528-Qwen3-8B

ls ~/.cache/modelscope/hub/models/deepseek-ai/

# Install the NVIDIA container runtime

curl -fsSL https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2404/x86_64/3bf863cc.pub | sudo gpg --dearmor -o /etc/apt/trusted.gpg.d/nvidia-cuda.gpg

echo "deb [signed-by=/etc/apt/trusted.gpg.d/nvidia-cuda.gpg] https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2404/x86_64/ /" | sudo tee /etc/apt/sources.list.d/nvidia-cuda.list

sudo apt update

sudo apt install -y nvidia-container-toolkit

sudo nvidia-ctk runtime configure --runtime=docker

sudo systemctl restart docker
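`nvidia-ctk runtime configure --runtime=docker` registers a runtime named `nvidia` in Docker's daemon configuration, by default `/etc/docker/daemon.json`. Docker only picks it up after a restart. `docker info` lists the registered runtimes. The sketch below checks the file directly instead, assuming the default path; the function names are hypothetical.

```python
import json
from pathlib import Path


def has_nvidia_runtime(daemon_json_text):
    """True if a Docker daemon.json text registers a runtime named "nvidia"."""
    if not daemon_json_text.strip():
        return False  # an empty file means no custom runtimes
    config = json.loads(daemon_json_text)
    return "nvidia" in (config.get("runtimes") or {})


def check_docker_config(path="/etc/docker/daemon.json"):
    """Read the daemon config (default path assumed) and look for the nvidia runtime."""
    p = Path(path)
    return p.is_file() and has_nvidia_runtime(p.read_text())
```

Run `check_docker_config()` as root, since the file may not be readable by other users.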

# Start

docker run -d --runtime nvidia --gpus all --name deepseek -v /root/.cache/modelscope/hub/models/:/root/.cache/modelscope/hub/models -p 8080:8080 --ipc=host --entrypoint tail vllm/vllm-openai:latest -f /dev/null

docker exec -it deepseek bash

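Inside the container, start vLLM. --gpu-memory-utilization sets the fraction of the GPU's total memory that vLLM may use; for example, 0.6 on an 8 GB GPU gives 0.6 × 8 GB = 4.8 GB.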

vllm serve /root/.cache/modelscope/hub/models/deepseek-ai/DeepSeek-R1-0528-Qwen3-8B --gpu-memory-utilization 0.6 --port 8080
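The memory arithmetic as a sketch. The weight estimate (parameters × bytes per parameter, in decimal GB) is a rough rule of thumb added here, not a vLLM formula. It ignores activations, the KV cache and CUDA overhead, all of which must also fit in the budget. By this estimate, an 8B model's BF16 weights alone (about 16 GB) do not fit in a 4.8 GB budget.

```python
def vllm_memory_budget_gb(total_gb, utilization):
    """GPU memory vLLM may use: --gpu-memory-utilization x total GPU memory."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return total_gb * utilization


def approx_weight_gb(params_billion, bytes_per_param):
    """Rough weight size in decimal GB: 1e9 params x N bytes = N GB per billion."""
    return params_billion * bytes_per_param


budget = vllm_memory_budget_gb(8, 0.6)   # the 8 GB example: about 4.8 GB
bf16_8b = approx_weight_gb(8, 2)         # 8B parameters in BF16 (2 bytes each)
fp8_8b = approx_weight_gb(8, 1)          # same parameter count with FP8 weights (1 byte)
print(f"budget {budget:.1f} GB, BF16 weights ~{bf16_8b} GB, FP8 weights ~{fp8_8b} GB")
```

GPU tools often report memory in GiB rather than GB, so treat these numbers as approximate.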

# Or

docker run -d --runtime nvidia --gpus all --name Qwen3-VL-2B-Instruct -v /root/.cache/modelscope/hub/models/:/vllm-workspace -p 8000:8000 --ipc=host --entrypoint vllm vllm/vllm-openai:latest serve Qwen/Qwen3-VL-2B-Instruct --gpu-memory-utilization 0.8 --quantization fp8 --max-model-len 4096 --enable-auto-tool-choice --tool-call-parser hermes

docker run -d --runtime nvidia --gpus all --name Qwen3-Embedding-0.6B -v /root/.cache/modelscope/hub/models/:/vllm-workspace -p 8001:8000 --ipc=host --entrypoint vllm vllm/vllm-openai:latest serve Qwen/Qwen3-Embedding-0___6B --gpu-memory-utilization 0.6 --quantization fp8 --max-model-len 1024

docker run -d --runtime nvidia --gpus all --name Qwen3-Reranker-0.6B -v /root/.cache/modelscope/hub/models/:/vllm-workspace -p 8002:8000 --ipc=host --entrypoint vllm vllm/vllm-openai:latest serve Qwen/Qwen3-Reranker-0___6B --gpu-memory-utilization 0.6 --quantization fp8 --max-model-len 1024 --hf_overrides '{"architectures": ["Qwen3ForSequenceClassification"], "classifier_from_token": ["no", "yes"], "is_original_qwen3_reranker": true}' --trust-remote-code --served-model-name Qwen3-Reranker-0.6B
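A client sketch for the embedding and reranker containers above, assuming they are up on ports 8001 and 8002. vLLM exposes an OpenAI-style `/v1/embeddings` endpoint and a Jina-style `/v1/rerank` endpoint. The response fields used here (`data[].embedding`, `results[].relevance_score`) follow those formats, but check them against your vLLM version. The embedding container sets no `--served-model-name`, so its model name is assumed to be the path passed to `serve`.

```python
import json
import math
import urllib.request

EMBED_URL = "http://127.0.0.1:8001/v1/embeddings"
RERANK_URL = "http://127.0.0.1:8002/v1/rerank"
EMBED_MODEL = "Qwen/Qwen3-Embedding-0___6B"   # assumed: defaults to the path given to `serve`
RERANK_MODEL = "Qwen3-Reranker-0.6B"          # set via --served-model-name


def post_json(url, payload):
    """POST a JSON body and decode the JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)


def parse_embeddings(body):
    """Vectors from an OpenAI-style embeddings response, in input order."""
    return [item["embedding"] for item in sorted(body["data"], key=lambda d: d["index"])]


def parse_rerank(body):
    """(document index, relevance score) pairs, highest score first."""
    ranked = sorted(body["results"], key=lambda r: r["relevance_score"], reverse=True)
    return [(r["index"], r["relevance_score"]) for r in ranked]


def embed(texts):
    return parse_embeddings(post_json(EMBED_URL, {"model": EMBED_MODEL, "input": texts}))


def rerank(query, documents):
    payload = {"model": RERANK_MODEL, "query": query, "documents": documents}
    return parse_rerank(post_json(RERANK_URL, payload))


def cosine(a, b):
    """Cosine similarity of two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0
```

For example, `rerank("What is vLLM?", ["vLLM is an inference engine.", "Bananas are yellow."])` should list the document indices from most to least relevant. `cosine(*embed(["text a", "text b"]))` compares two texts by their embeddings.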